Virtual reality has seen enormous progress in the past few years. Given its recent surges in development, it may come as a bit of a surprise to learn that the ideas underpinning what we now call VR were laid out way back in the 60s. Not all of the imagined possibilities have come to pass, but we’ve learned plenty about what is (and isn’t) important for a compelling VR experience, and gained insights as to what might happen next.

If virtual reality’s best ideas came from the 60s, what were they, and how did they turn out?

Interaction and Simulation

First, I want to briefly cover two important precursors to what we think of as VR: interaction and simulation. Prior to the 1960s, the state-of-the-art examples of each were the Link Trainer and Sensorama, respectively.

The Link Trainer was an early kind of flight simulator, and its goal was to deliver realistic instrumentation and force feedback on aircraft flight controls. This allowed a student to safely gain an understanding of different flying conditions, despite not actually experiencing them. The Link Trainer did not simulate any other part of the flying experience, but its success showed how feedback and interactivity — even if artificial and limited in nature — could allow a person to gain a “feel” for forces that were not actually present.

Sensorama was a specialized pod that played short films in stereoscopic 3D while synchronized to fans, odor emitters, a motorized chair, and stereo sound. It was a serious effort at engaging a user’s senses in a way intended to simulate an environment. But being a pre-recorded experience, it was passive in nature, with no interactive elements.

Combining interaction with simulation effectively had to wait until the 60s, when the digital revolution and computers provided the right tools.

The Ultimate Display

In 1965 Ivan Sutherland, a computer scientist, authored an essay entitled The Ultimate Display (PDF) in which he laid out ideas far beyond what was possible with the technology of the time. One might expect The Ultimate Display to be a long document. It is not. It is barely two pages, and most of the first page is musings on burgeoning interactive computer input methods of the 60s.

The second part is where it gets interesting, as Sutherland shares the future he sees for computer-controlled output devices and describes an ideal “kinesthetic display” that served as many senses as possible. Sutherland saw the potential for computers to simulate ideas and output not just visual information, but to produce meaningful sound and touch output as well, all while accepting and incorporating a user’s input in a self-modifying feedback loop. This was forward-thinking stuff; recall that when this document was written, computers weren’t even generating meaningful sounds of any real complexity, let alone visual displays capable of arbitrary content.

A Way To Experience The Unreal

What if we could similarly experience concepts that could not be realized in our physical world? It would be a way to gain intuitive and intimate familiarity with concepts not otherwise available to us.

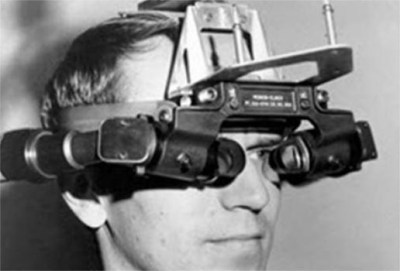

As a first step in actualizing these ideas, Sutherland and some of his students created a large ceiling-suspended system dubbed Sword of Damocles. It was the first head-mounted display whose visuals did not come from a camera, but were generated by a computer. It displayed only line-based vector graphics, but it was able to modify what it showed in real-time based on head position and user input.

Leveraging a computer’s ability to process feedback and dynamically generate visuals was key to an interactive system capable of generating its own simulated environment. For the first time, a way to meaningfully fuse interaction with abstract simulation was within reach, and there was nowhere to go but up.

Ideas From the 60s That Happened

Many concepts that Sutherland predicted have come to pass, at least partially, and are recognizable in some modern form.

Objects Displayed by a Computer Need Not Follow Ordinary Rules of Reality

Being able to define things free from the confines of physical reality, and adjust their properties at will, serves CAD modeling and other simulation work just as well as it serves entertainment like gaming. In fact, it would even be fair to say that gaming in particular thrives in this space.

Sutherland envisioned a computer-controlled display as a looking glass into a mathematical wonderland. A great example of this concept is this 3D engine for non-Euclidean geometry which presents impossible geometries in a familiar, interactive way.

Tactile and Haptic Feedback

Today’s VR controllers (and mobile devices like phones, for that matter) rely heavily on being able to deliver a range of subtle vibrations as meaningful feedback. While not measuring up to Sutherland’s ideal of accurately simulating things like complex physics, it nevertheless gives users an intuitive understanding of unseen forces and boundaries, albeit simple ones, like buttons that do not exist as physical objects.

Head Tracking

Making a display change depending on where one is looking is a major feature of VR. While Sutherland only mentioned this concept briefly, accurate and low-latency tracking has turned out to be a feature of critical importance. When Valve was first investigating VR and AR, an early indicator that they were onto something was when researchers experienced what was possible when low-persistence displays were combined with high-quality tracking; it was described as looking through a window into another world.
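To make the idea concrete, here is a minimal sketch of the core of head tracking, reduced to a flat, top-down 2D world. It is only an illustration, not how any particular headset does it: the function name `world_to_view`, the coordinate convention, and the yaw-only tracking are all assumptions made for this example.

```python
import math


def world_to_view(point, head_pos, head_yaw):
    """Express a world-space (x, y) point relative to the viewer's head.

    Assumed convention (hypothetical, for illustration only): head_yaw is in
    radians, measured counter-clockwise from the +x axis. Returns a tuple
    (forward, left): how far the point lies ahead of the viewer and how far
    to the viewer's left.
    """
    dx = point[0] - head_pos[0]
    dy = point[1] - head_pos[1]
    # Project the offset onto the head's forward and left directions.
    forward = dx * math.cos(head_yaw) + dy * math.sin(head_yaw)
    left = -dx * math.sin(head_yaw) + dy * math.cos(head_yaw)
    return (forward, left)


# A virtual object fixed in the world, two units along +y from the viewer.
virtual_object = (0.0, 2.0)

# Facing along +y: the object is straight ahead.
facing_object = world_to_view(virtual_object, (0.0, 0.0), math.pi / 2)

# Facing along +x: the same object is now off to the viewer's left.
turned_away = world_to_view(virtual_object, (0.0, 0.0), 0.0)
```

The object never moves; only the head pose changes, and the display has to redraw accordingly. Any lag or drift in the measured head pose shows up directly as the virtual world sliding around, which is why tracking quality matters so much.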

Hand and Body Tracking, Including Gaze Tracking

Sutherland envisioned the ability of a computer to read a user’s body as an input method, particularly high-dexterity parts of the body like the hand and eyes. Hand tracking is an increasingly common feature in consumer VR systems today. Eye tracking exists, but more on that in a moment.

Some Ideas Haven’t Happened Yet

There are a number of concepts that haven’t happened, or exist only in a very limited way, but that list is shrinking. Here are the most notable standouts.

Robust Eye Tracking Is Hard

Sutherland wrote “it remains to be seen if we can use a language of glances to control a computer,” and so far that remains the case.

It turns out that eye tracking is fairly easy to get mostly right: one simply points a camera at an IR-illuminated eyeball, looks for the black circle of the pupil, and measures its position to determine where its owner is looking. No problems there, and enterprising hackers have made plenty of clever eye tracking projects.
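The "mostly right" version really is that simple. As a rough sketch, assuming the camera frame arrives as a grid of grayscale values (0 is black, 255 is white) and that the pupil is the only dark region in view, the pupil position is just the average location of the dark pixels. The function name `find_pupil_center` and the threshold value are made up for this example.

```python
def find_pupil_center(image, threshold=50):
    """Return the (row, col) centroid of all pixels darker than threshold.

    image is a list of rows of grayscale values. Returns None when no pixel
    is dark enough to count as pupil.
    """
    count = 0
    row_sum = 0
    col_sum = 0
    for r, row in enumerate(image):
        for c, value in enumerate(row):
            if value < threshold:
                count += 1
                row_sum += r
                col_sum += c
    if count == 0:
        return None
    return (row_sum / count, col_sum / count)


# Synthetic 7x7 frame: bright background with a dark 3x3 "pupil".
frame = [[200] * 7 for _ in range(7)]
for r in range(2, 5):
    for c in range(3, 6):
        frame[r][c] = 10

center = find_pupil_center(frame)  # (3.0, 4.0), the middle of the dark square
```

A plain centroid like this assumes a tidy, dark, roughly round blob. Eyelashes, reflections, or an irregular pupil shape will pull the estimate off target, which is where the trouble starts.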

Eye tracking gets trickier when high levels of reliable accuracy are needed, such as using it to change how visuals are rendered based on exactly where a user is looking. There are a number of reasons for this: not only does the human eye make frequent, involuntary movements called saccades, but roughly 1% of humans have pupils that do not present as nice round black shapes, making them difficult for software to pick out. On top of that, there is a deeper problem. Because a pupil is nothing more than an opening in the flexible tissue of the iris, it is not always a consistent shape. The pupil in fact wobbles and wiggles whenever the eye moves (which is frequently), and this makes highly accurate positioning difficult to interpret. Here is a link (cued to 37:55 in) to a video presentation explaining these issues, showing why it is desirable to avoid eye tracking in certain applications.

Simulation-Accurate Force Feedback Isn’t Ready

Force feedback devices have existed for years, and there is renewed interest in force feedback thanks to VR development. But we are far from using it in the way Sutherland envisioned: to simulate and gain intuitive familiarity with complex concepts and phenomena, learning them as well as we know our own natural world.

Simulating something like a handshake or a hug ought to be simple by that metric, but force feedback that can meaningfully simulate simple physics remains the realm of expensive niche applications that can’t exist without specialized hardware.

Holodeck-level Simulation

Probably the most frequently quoted part of The Ultimate Display is the final few sentences, in which Sutherland describes something that sounds remarkably like the holodeck from Star Trek:

The ultimate display would, of course, be a room within which the computer can control the existence of matter. A chair displayed in such a room would be good enough to sit in. Handcuffs displayed in such a room would be confining, and a bullet displayed in such a room would be fatal. With appropriate programming such a display could literally be the Wonderland into which Alice walked.

Clearly we’re nowhere near that point, but if we ever are, it might be the last thing we ever need to invent.

Important Features vs. Cool Ones

The best ideas may have come from the 60s, but we’ve learned a lot since then about what is and isn’t actually important to creating immersive experiences. Important features are the ones a technology really needs to deliver on, because they are crucial to immersion. Immersion is a kind of critical mass, a sensory “aha!” moment in which one ceases to notice the individual worky bits involved, and begins to interact seamlessly and intuitively with the content in a state of flow. Important features help that happen.

For example, it may seem that a wide field of view in an HMD is important for immersion, but that turns out to not quite be the case. We covered a fascinating presentation about how human vision uses all sorts of cues to decide how “real” something is, and what that means for HMD development. It turns out that a very wide field of view in a display is desirable, but it is not especially important for increasing immersion. Audio has similar issues, with all kinds of things being discovered as important to delivering convincing audio simulation. For example, piping sound directly into the ear canals via ear buds turns out to be a powerful way for one’s brain to classify sounds as “not real” no matter how accurately they have been simulated. Great for listening to music, less so for a convincing simulation.

Another feature of critical importance is a display with robust tracking and low latency. I experienced this for myself the first time I tried flawless motion tracking on a modern VR headset. No matter how I moved or looked around, there was no perceptible lag or drift. I could almost feel my brain effortlessly slide into a groove, as though it had decided the space I was in and the things I was looking at existed entirely separate from the thing I was wearing on my head. That was something I definitely did not feel when I wore a Forte VFX1 VR headset in the mid-90s. At the time, it wasn’t the low resolution or the small field of view that bothered me, it was the drifty and vague head tracking that I remember the most. There was potential, but it’s no wonder VR didn’t bloom in the 90s.

What’s Next?

One thing that fits Sutherland’s general predictions about body tracking, but which he probably did not see coming, is face and expression tracking. It is experimental work from Facebook, but is gaining importance mainly for the purpose of interacting with other people digitally, rather than as a means of computer input.

Speaking of Facebook, a social network spearheading VR development (while tightening their grip on it) definitely was not predicted in the 60s, yet it seems to be next for VR nevertheless. But I never said the future of VR came from the 60s, just that the good ideas did.
