In today’s world we are surrounded by various sources of written information, information which we generally assume to have been written by other humans. Whether this is in the form of books, blogs, news articles, forum posts, feedback on a product page or the discussions on social media and in comment sections, the assumption is that the text we’re reading has been written by another person. However, over the years this assumption has become ever more likely to be false, most recently due to large language models (LLMs) such as GPT-2 and GPT-3 that can churn out plausible paragraphs on just about any topic when requested.

This raises the question of whether we are about to reach a point where we can no longer be reasonably certain that an online comment, a news article, or even entire books and film scripts weren’t churned out by an algorithm, or perhaps even where an online chat with a new sizzling match turns out to be just you getting it on with an unfeeling collection of code that was trained and tweaked for maximum engagement with customers. (Editor’s note: no, we’re not playing that game here.)

As such machine-generated content and interactions begin to play an ever bigger role, this raises both the question of how you can detect such generated content and the question of whether it matters that the content was generated by an algorithm instead of by a human being.

Tedium Versus Malice

In George Orwell’s Nineteen Eighty-Four, Winston Smith describes a department within the Ministry of Truth called the Fiction Department, where machines are constantly churning out freshly generated novels based around certain themes. Meanwhile in the Music Department, new music is being generated by another system called a versificator.

Yet as dystopian as this fictional world is, this machine-generated content is essentially harmless, as Winston remarks later in the book, when he observes a woman in the prole area of the city singing the latest ditty, adding her own emotional intensity to a love song that was spat out by an unfeeling, unthinking machine. This brings us to the most common use of machine-generated content, which many would argue is merely a form of automation.

The encompassing term here is ‘automated journalism’, and it has been in use at respected journalistic outlets like Reuters, AP and others for years now. The use cases here are simple and straightforward: these are systems that are configured to take in information on stock performance, on company quarterly reports, on sport match outcomes or those of local elections and churn out an article following a preset pattern. The obvious advantage is that rooms full of journalists tediously copying scores and performance metrics into article templates can be replaced by a computer algorithm.

In these cases, work that involves the journalistic or artistic equivalent of flipping burgers at a fast food joint is replaced by an algorithm that never gets bored or distracted, while the humans can do more intellectually challenging work. Few would argue that there is a problem with this kind of automation, as it basically does exactly what we were promised it would do.

Where things get shady is when it is used for nefarious purposes, such as to draw in search traffic with machine-generated articles that try to sell the reader something. Although this has recently led to considerable outrage in the case of CNET, the fact of the matter is that this is an incredibly profitable approach, so we may see more of it in the future. After all, a large language model can generate a whole stack of articles in the time it takes a human writer to put down a few paragraphs of text.

More of a grey zone is where it concerns assisting a human writer, which is becoming an issue in the world of scientific publishing, as recently covered by The Guardian, who themselves pulled a bit of a stunt in September of 2020 when they published an article that had been generated by the GPT-3 LLM. The caveat there was that it wasn’t the straight output from the LLM, but what a human editor had puzzled together from multiple outputs generated by GPT-3. This is rather indicative of how LLMs are generally used, and hints at some of their biggest weaknesses.

No Wrong Answers

At its core an LLM like GPT-3 is a heavily interconnected database of values that was generated from input texts that form the training data set. In the case of GPT-3 this makes for a database (model) that’s about 800 GB in size. In order to search in this database, a query string is provided – generally as a question or leading phrase – which after processing forms the input to a curve fitting algorithm. Essentially this determines the probability of the input query being related to a section of the model.

Once a probable match has been found, output can be generated based on what is the most likely next connection within the model’s database. This allows for an LLM to find specific information within a large dataset and to create theoretically infinitely long texts. What it cannot do, however, is to determine whether the input query makes sense, or whether the output it generates makes logical sense. All the algorithm can determine is whether it follows the most likely course, with possibly some induced variation to mix up the output.
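To make the ‘most likely course, with some induced variation’ idea a bit more concrete, here is a minimal sketch of picking the next word from a set of scores. The scores, the `next_token` name and the `temperature` knob are all illustrative assumptions; this is a toy, not how GPT-3 is actually implemented.

```python
import math
import random

def next_token(scores, temperature=0.0, rng=None):
    """Pick the next token from a {token: score} mapping.

    temperature <= 0 is greedy: always take the highest-scoring token.
    temperature > 0 samples from a softmax, which adds variation.
    """
    if temperature <= 0:
        return max(scores, key=scores.get)
    rng = rng or random.Random()
    tokens = list(scores)
    # Softmax with temperature; subtracting the max keeps exp() well-behaved.
    top = max(scores.values())
    weights = [math.exp((scores[t] - top) / temperature) for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

# Made-up scores for the word following "The cat sat on the"
scores = {"mat": 4.0, "sofa": 3.2, "roof": 2.5, "moon": 0.1}

greedy = next_token(scores)  # always the single most likely word
varied = [next_token(scores, temperature=1.0, rng=random.Random(i))
          for i in range(5)]  # may differ from the greedy pick
```

Note that nothing in here checks whether the chosen word is true or sensible; it only follows the numbers.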

Something which is still regarded as an issue with LLM-generated texts is repetition, though this can be resolved with some tweaks that give the output a ‘memory’ to cut down on the number of times that a specific word is used. What is harder to resolve is the absolute confidence of LLM output, as it has no way to ascertain whether it’s just producing nonsense and will happily keep on babbling.
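One simple form such a ‘memory’ could take is a penalty that lowers the score of any word that has already been used. The sketch below assumes a plain count-based penalty with a hypothetical `penalize_repeats` helper; real models may use different schemes.

```python
from collections import Counter

def penalize_repeats(scores, history, penalty=1.0):
    """Return a copy of scores with already-used tokens pushed down.

    Each earlier occurrence of a token subtracts `penalty` from its score,
    so a word becomes less attractive the more often it has appeared.
    """
    seen = Counter(history)
    return {tok: s - penalty * seen[tok] for tok, s in scores.items()}

# Made-up scores; "very" has already been used twice in the output so far.
scores = {"very": 3.0, "quite": 2.5, "rather": 2.0}
history = ["very", "very"]

adjusted = penalize_repeats(scores, history, penalty=1.0)
best = max(adjusted, key=adjusted.get)
```

Without the penalty the top pick would be "very" yet again; with it, a different word gets a turn.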

Yet despite this, when human subjects are shown texts generated by GPT-2 and GPT-3, as in a 2021 study by Elizabeth Clark et al., the likelihood of them recognizing texts generated by these LLMs – even after some training – doesn’t exceed 55%, making it roughly akin to pure chance. Just why is it that humans are so terrible at recognizing these LLM-generated texts, and can computers perhaps help us here?

Statistics Versus Intuition

When a human being is asked whether a given text was created by a human or generated by a machine, they’re likely to essentially guess based on their own experiences, a ‘gut feeling’ and possibly a range of clues. In a 2019 paper by Sebastian Gehrmann et al., a statistical approach to detecting machine-generated text is proposed, in addition to identifying a range of nefarious instances of auto-generated text. These include fake comments in opposition to US net neutrality and misleading reviews.

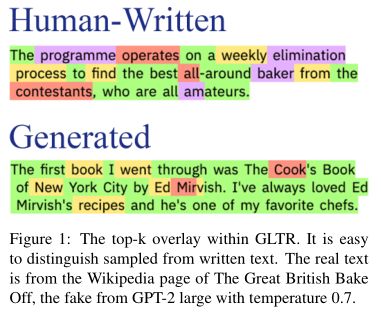

The statistical approach detailed by Gehrmann et al., called Giant Language model Test Room (GLTR, GitHub source), involves analyzing a given text for its predictability. This is a characteristic that is often described by readers as ‘shallowness’ of a machine-generated text, in that it keeps waffling on for paragraphs without really saying much. With a tool like GLTR such a text would light up mostly green in the visual representation, as it uses a limited and predictable vocabulary.
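The idea behind this kind of rank-based colouring can be sketched in a few lines. The bucket limits below (top 10, top 100, top 1000, everything else) and the helper names are assumptions for illustration, and the per-position candidate lists would in reality come from a language model rather than being typed in by hand. This is not GLTR’s own code.

```python
from collections import Counter

# Assumed bucket boundaries, as (upper rank limit, colour) pairs.
BUCKETS = [(10, "green"), (100, "yellow"), (1000, "red")]

def bucket_for_rank(rank):
    """Map a 1-based rank to a colour; past the last limit is purple."""
    for limit, colour in BUCKETS:
        if rank <= limit:
            return colour
    return "purple"

def colour_counts(tokens, ranked_predictions):
    """Count colour buckets for a text.

    ranked_predictions[i] is the model's candidate list for position i,
    most likely first. A token missing from its list counts as purple.
    """
    counts = Counter()
    for token, candidates in zip(tokens, ranked_predictions):
        if token in candidates:
            counts[bucket_for_rank(candidates.index(token) + 1)] += 1
        else:
            counts["purple"] += 1
    return counts

# Hand-written stand-in for a model's predictions at each position.
tokens = ["the", "cat", "sat"]
ranked_predictions = [["the", "a"], ["dog", "cat"], ["ran", "jumped", "slept"]]
counts = colour_counts(tokens, ranked_predictions)
```

A text where nearly every word lands in the green bucket is one the model found very easy to predict, which is the hint that it may have been generated.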

In a paper presented by Daphne Ippolito et al. (PDF) at the 2020 meeting of the Association for Computational Linguistics, the various approaches to detecting machine-generated text are covered, along with the effectiveness of these methods used in isolation versus in a combined fashion. The top-k analysis approach used by GLTR is included in these methods, with the alternate approaches of nucleus sampling (top-p) and others also addressed.

Ultimately, in this study the human subjects scored a median of 74% when classifying GPT-2 texts, with the automated discriminator system generally scoring better. Of note is the study by Ari Holtzman et al. that is referenced in the conclusion, in which it is noted that human-written text generally has a cadence that dips in and out of a low probability zone. This is not only what makes a text interesting to read, but also provides a clue to what makes text seem natural to a human reader.

With modern LLMs like GPT-3, an approach like the nucleus sampling proposed by Holtzman et al. is what provides the more natural cadence that would be expected from a text written by a human. Rather than picking from a fixed-size top-k list of options, one selects from a dynamically resized pool of candidates: the smallest set of most likely options whose combined probability mass reaches a threshold p. The resulting list of options, top-p, then provides a much richer output than the top-k approach that was used with GPT-2 and kin.
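The difference between the two selection schemes is easiest to see side by side. What follows is a minimal sketch with made-up probabilities and hypothetical `top_k` and `top_p` helpers, not the implementation used by any particular model.

```python
def top_k(probs, k):
    """Keep the k most probable tokens and renormalise."""
    kept = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k]
    total = sum(p for _, p in kept)
    return {tok: p / total for tok, p in kept}

def top_p(probs, p):
    """Keep the smallest set of most probable tokens whose mass reaches p."""
    kept, mass = [], 0.0
    for tok, prob in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept.append((tok, prob))
        mass += prob
        if mass >= p:
            break
    return {tok: prob / mass for tok, prob in kept}

# One distribution with an obvious winner, one where many words fit.
peaked = {"Paris": 0.92, "Lyon": 0.04, "Rome": 0.03, "Oslo": 0.01}
flat = {"red": 0.3, "blue": 0.25, "green": 0.2, "black": 0.15, "white": 0.1}

pool_peaked = top_p(peaked, 0.8)  # shrinks to the single obvious choice
pool_flat = top_p(flat, 0.8)      # widens when the model is less sure
fixed_pool = top_k(peaked, 2)     # always two candidates, regardless
```

With top-k the pool is the same size whether the model is confident or not, whereas the top-p pool shrinks and grows with the shape of the distribution.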

What this also means is that in the automatic analysis of a text, multiple approaches must be considered. For the analysis by a human reader, the distinction between a top-k (GPT-2) and top-p (GPT-3) text would be stark, with the latter type likely to be identified as being written by a human.

Uncertain Times

It would thus seem that the answer to the question of whether a given text was generated by a human or not is a definitive ‘maybe’. Although statistical analysis can provide some hints as to the likelihood of a text having been generated by an LLM, ultimately the final judgement would have to be with a human, who can not only determine whether the text passes muster semantically and contextually, but also check whether the presumed source of a text is genuine.

Naturally, there are plenty of situations where it may not matter who wrote a text, as long as the information in it is factually correct. Yet when there’s possibly nefarious intent, or the intent to deceive, it pays to practice due diligence. Even with auto-detecting algorithms in place, and with a trained and cautious user, the onus remains on the reader to cross-reference information and ascertain whether a statement made by a random account on social media might be genuine.

(Editor’s Note: This post about OpenAI’s attempt to detect its own prose came out between this article being written and published. Their results aren’t that great, and as with everything from “Open”AI, their methods aren’t publicly disclosed. You can try the classifier out, though.)
