If you’re gonna be a hacker eventually you’re gonna have to write code. And if you write code eventually you’re gonna have to deal with concurrency. Concurrency is what we call it when parts of our program run at the same time. That could be because of something fairly straightforward, like multiple threads, or multiple processes; or something a little more complicated such as event loops, asynchronous or non-blocking I/O, interrupts and signal handlers, re-entrancy, co-routines / fibers / green threads, job queues, DMA and hardware level concurrency, speculative or out-of-order execution at CPU-level, time-sharing on single-core systems, or parallel execution on multi-core systems. There are just so many ways to get tied up with concurrency.

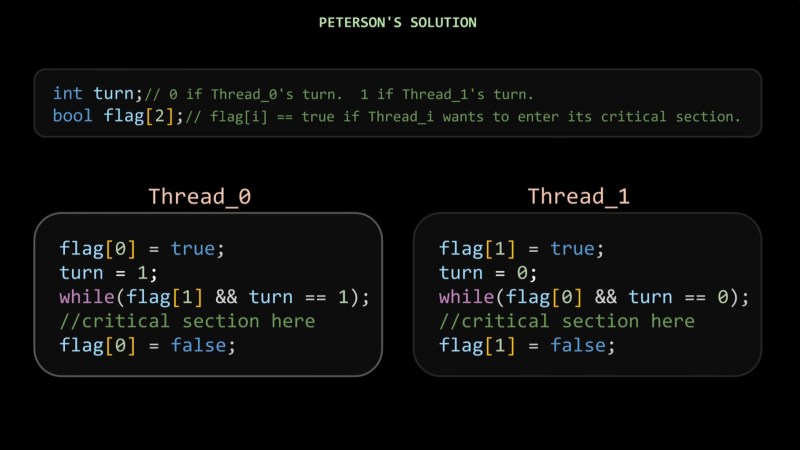

In this video from [Core Dumped] we learn about The ’80s Algorithm to Avoid Race Conditions (and Why It Failed). This video explains what a race condition looks like and talks through what the critical section is and approaches to protecting it. This video introduces an old approach to protect the critical section first invented in 1981 known as Peterson’s solution, but then goes on to explain how Peterson’s solution is no longer reliable as much has changed since the 1980s, particularly compilers will reorganize instructions and CPUs may run code out of order. So there is no free lunch and if you have to deal with concurrency you’re going to want some kind of support for a mutex of some type. Your programming language and its standard library probably have various types of locks available and if not you can use something like flock (also available as a syscall, to complement the POSIX fnctl), which may be available on your platform.

If you’re interested in contemporary takes on concurrency you might like to read Amiga, Interrupted: A Fresh Take On Amiga OS or The Linux Scheduler And How It Handles More Cores.

No comments:

Post a Comment